Designing the First Generative AI Platform for Tabular Data

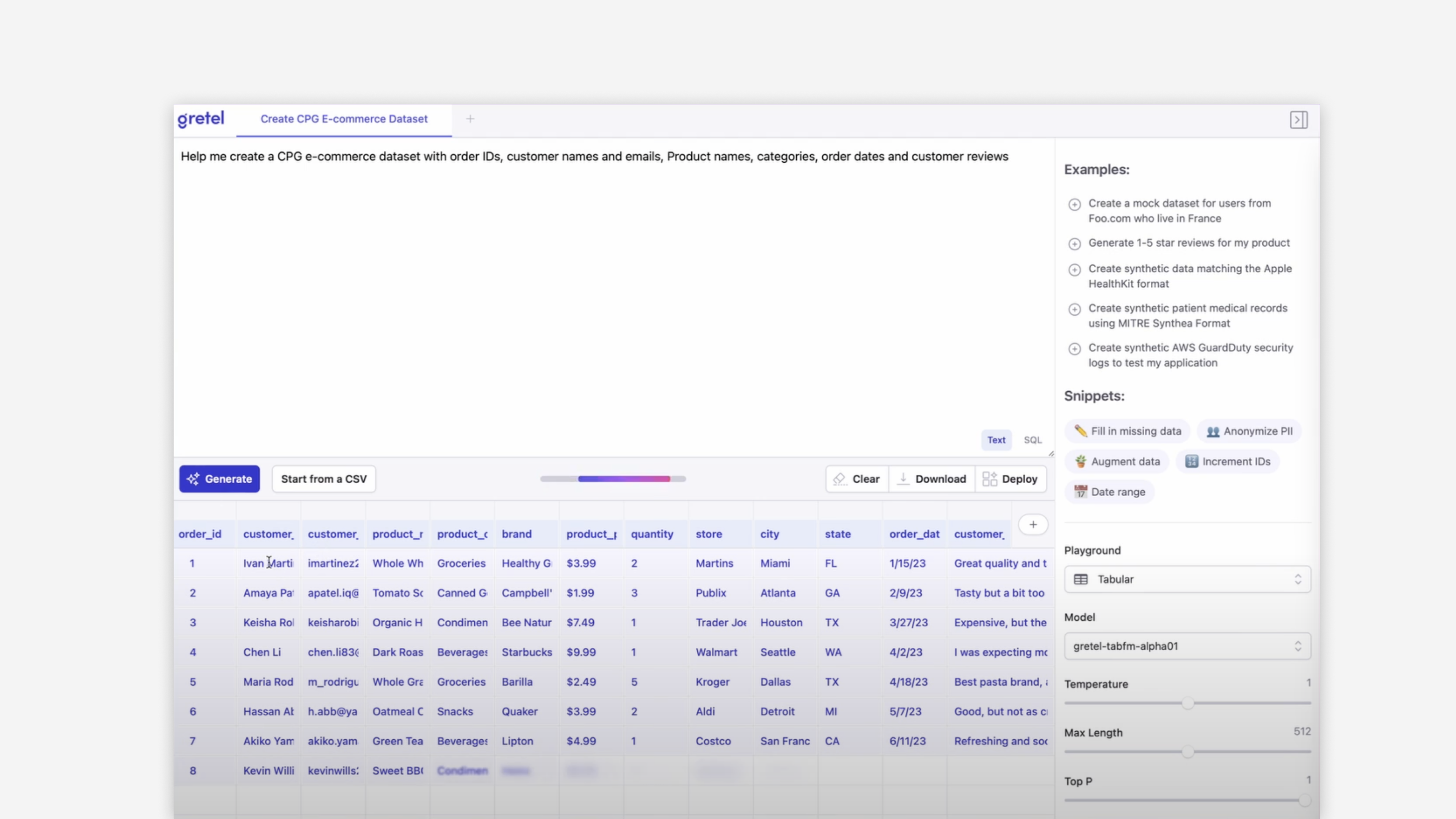

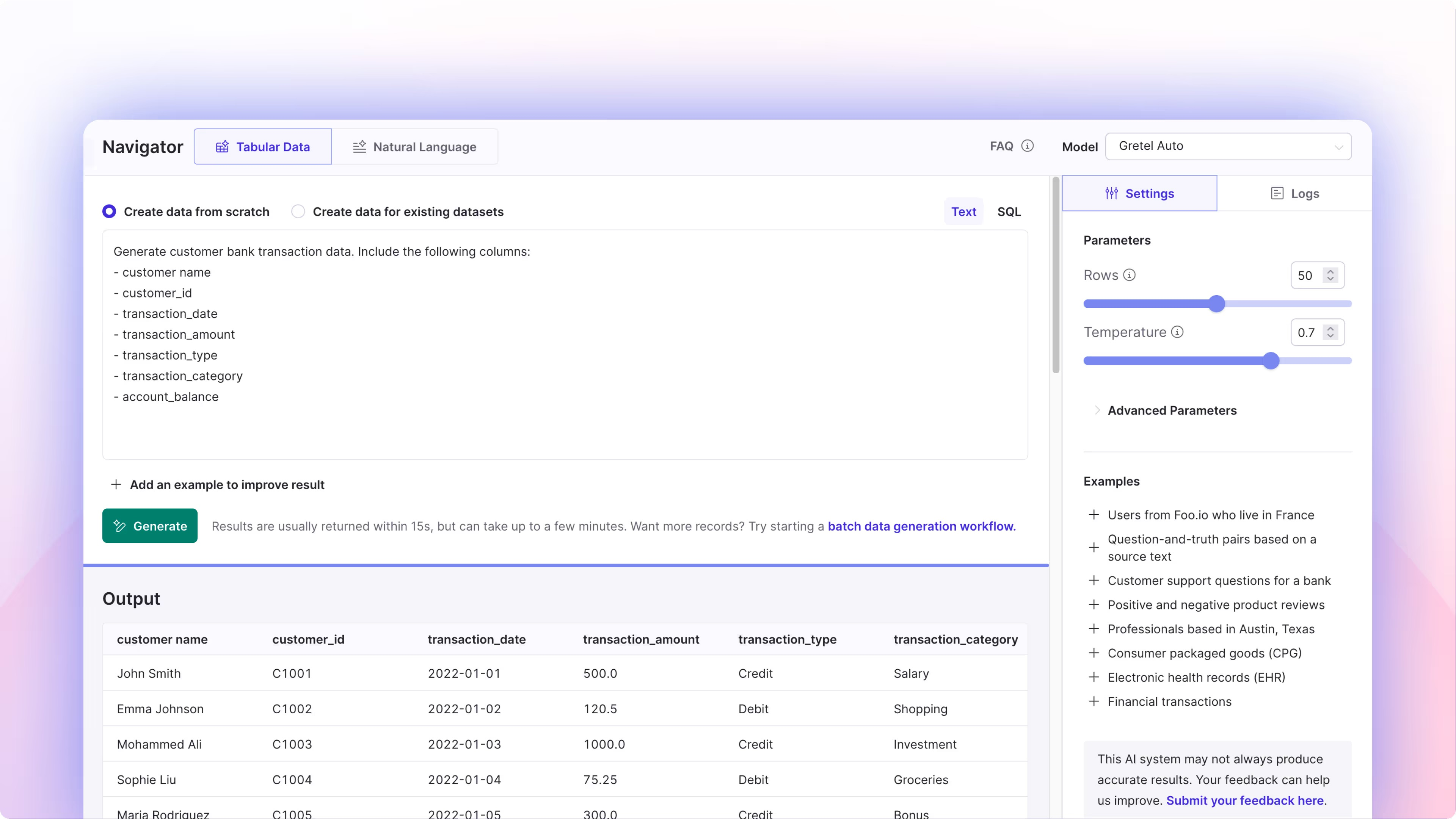

Gretel Navigator is the first generative AI platform for tabular data, enabling users to create and refine datasets interactively using prompts and real-time feedback. With no existing design patterns to reference, I developed a new research approach based on expert interviews and assumption tracking to simplify complex AI processes. Through rapid prototyping and user testing, I created an intuitive, accessible platform that significantly improved user engagement and adoption.

Intro

Designing a new product often starts with analyzing existing user behaviors and data. However, in this project, there was no prior user behavior or research to guide the design process. Instead, we had to define and refine a completely new approach while working within a tight timeline.

The product, Gretel Navigator, is the first compound AI system designed to create, edit, and augment tabular data. Unlike traditional batch synthetic data generation, Gretel Navigator lets users build datasets from scratch iteratively, through a more interactive and intuitive process.

The Goal

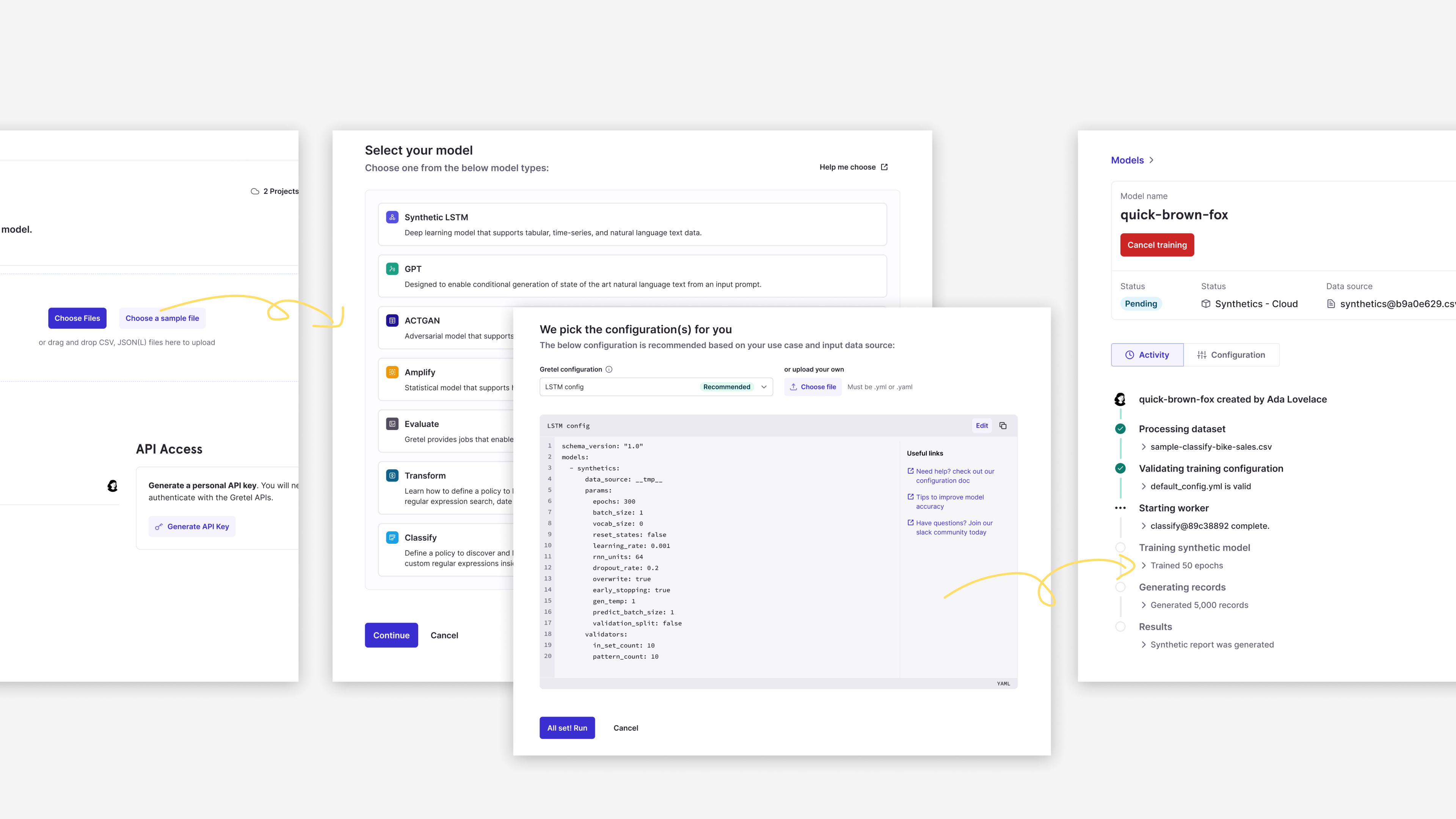

Our existing synthetic data generation process allowed users to generate large amounts of tabular data using different AI models (high-dimensional, time series, GPT-based models, etc.). The workflow was as follows:

- Step 1. Users provided existing real-world data.

- Step 2. They selected a model type.

- Step 3. The model was trained on that data.

- Step 4. The system generated synthetic datasets.

Limitations of the Process:

- Lack of visibility: Users couldn't evaluate the accuracy of their configuration settings before training the model.

- Data dependency: The process required users to have existing real-world datasets.

- Rigid process: The workflow was linear and waterfall-like. Users couldn’t make changes mid-process; they had to start over.

- High learning curve: Users needed a strong understanding of data science concepts to configure training settings correctly.

As a designer, my goal was to create an interactive and intuitive platform that empowers users to generate synthetic data easily and effectively. The platform needed to eliminate reliance on large real-world datasets and complex configuration settings. Instead of requiring deep technical knowledge, it should let users describe their data generation needs with natural language prompts and a few basic settings.

Another key objective was to enable real-time interaction, so users could see the immediate impact of their inputs and refine the results iteratively. The goal was to reduce the learning curve and create a fluid experience where users could confidently explore, adjust, and optimize their data without restarting the process.

Unique Research Approach

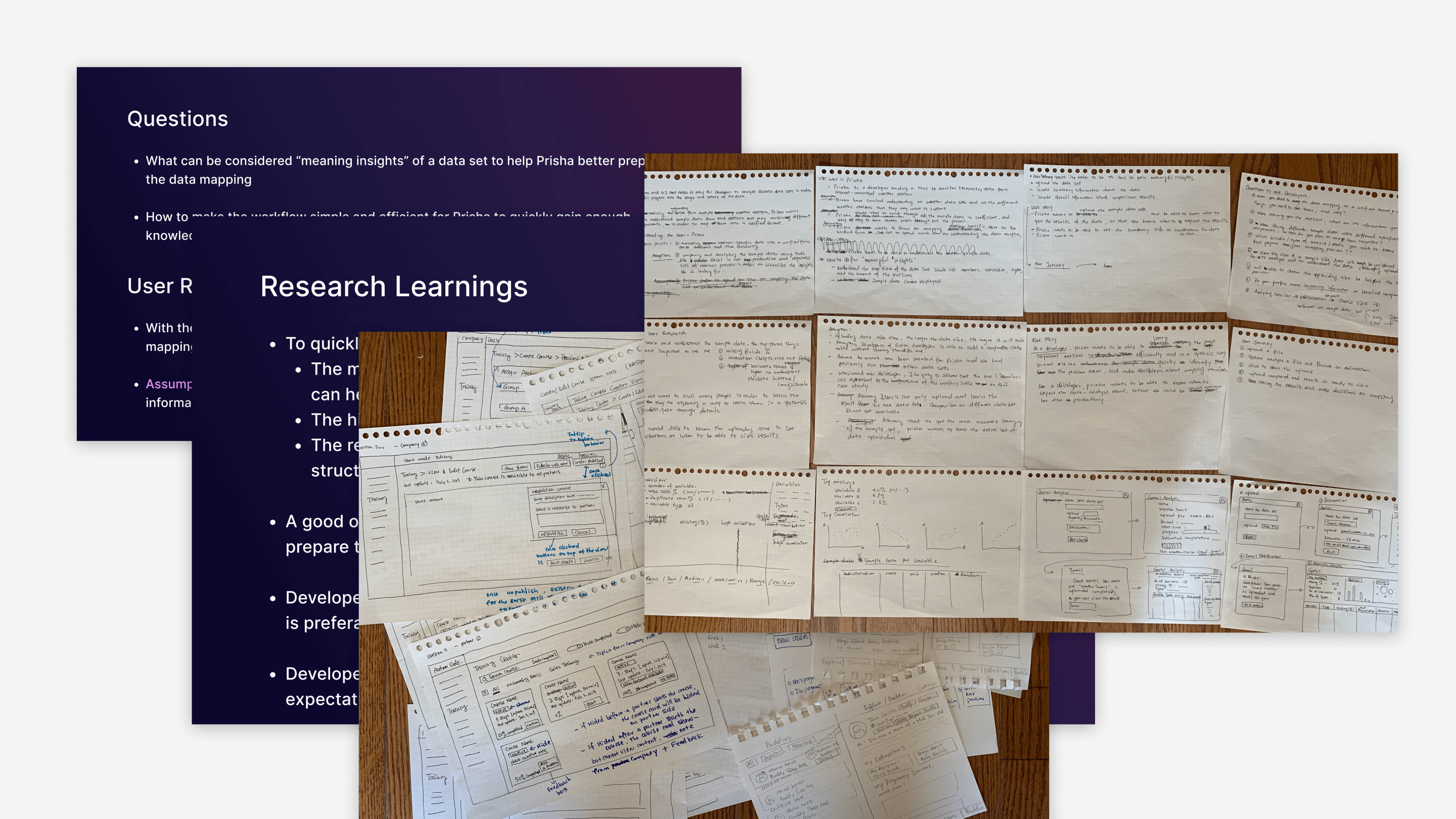

Traditional design research focuses on understanding existing user journeys and behaviors, but in this case, there was no precedent.

Through initial user interviews, we discovered that even those interested in the product had no clear understanding of what they needed. Many expected us to educate them on the possibilities and suggest the best way to use the tool.

This realization meant that traditional user research methods were insufficient. We needed to establish our own research approach from scratch.

Expert Interviews and Studies

Internally, we had a strong data science team that built our AI models. I conducted individual interviews with them to understand how they envisioned using and interacting with the product. These discussions yielded both valuable insights and significant challenges.

Key Insights:

- The tool should be accessible to non-technical users, making it easy to experiment and iterate.

- The interface should offer a seamless, low-friction experience for users trying out generative AI.

- We could be opinionated about the functions we proposed, or use smart suggestions to guide users through their journey.

Key Challenges:

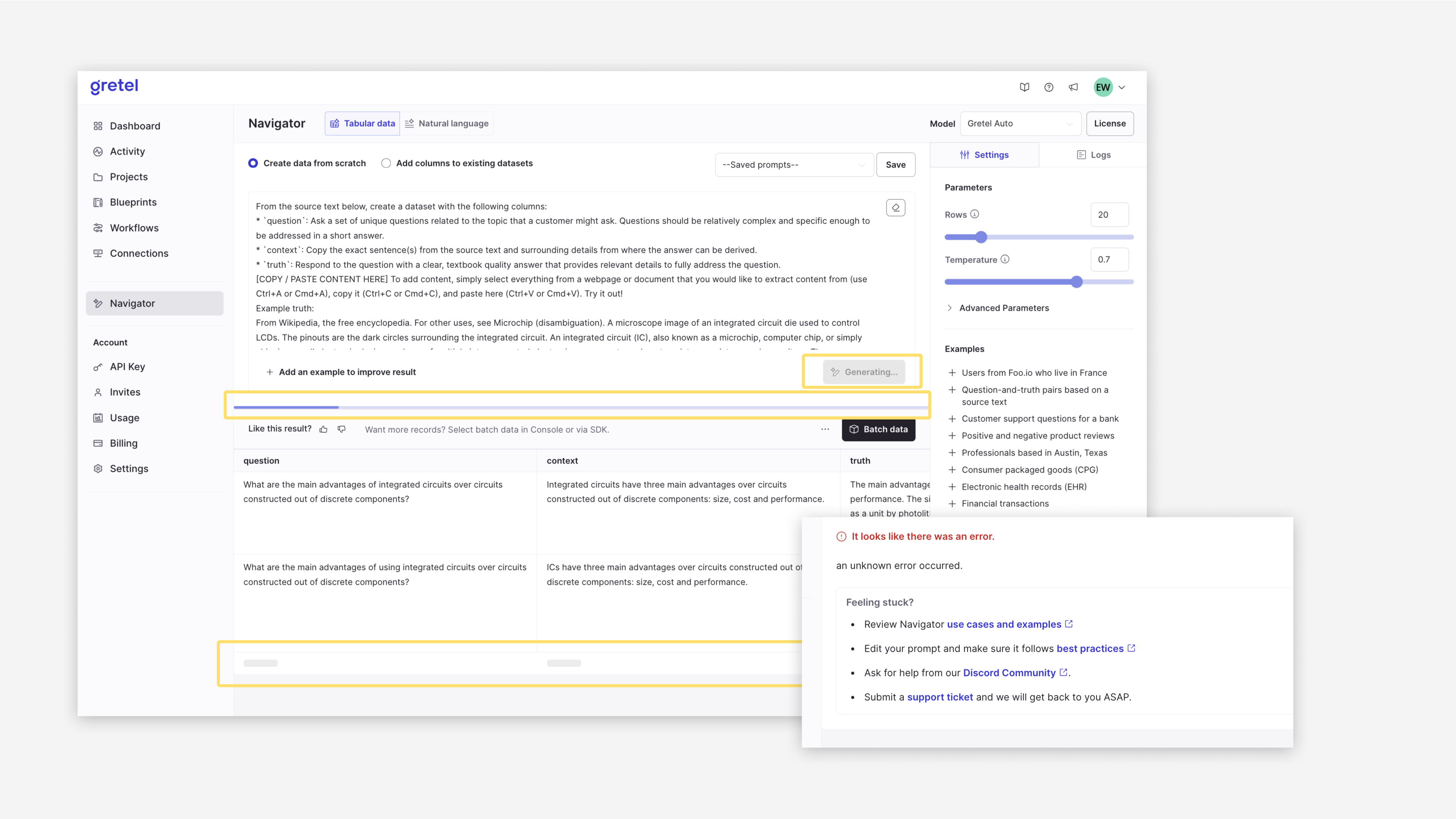

- Complexity of prompt engineering: Users needed to provide detailed prompts to guide model training effectively. Translating this technical requirement into a simple, intuitive UI was a major challenge.

- Bridging the gap between technical and non-technical users: How could we make the interface useful for both advanced users and those unfamiliar with AI models?

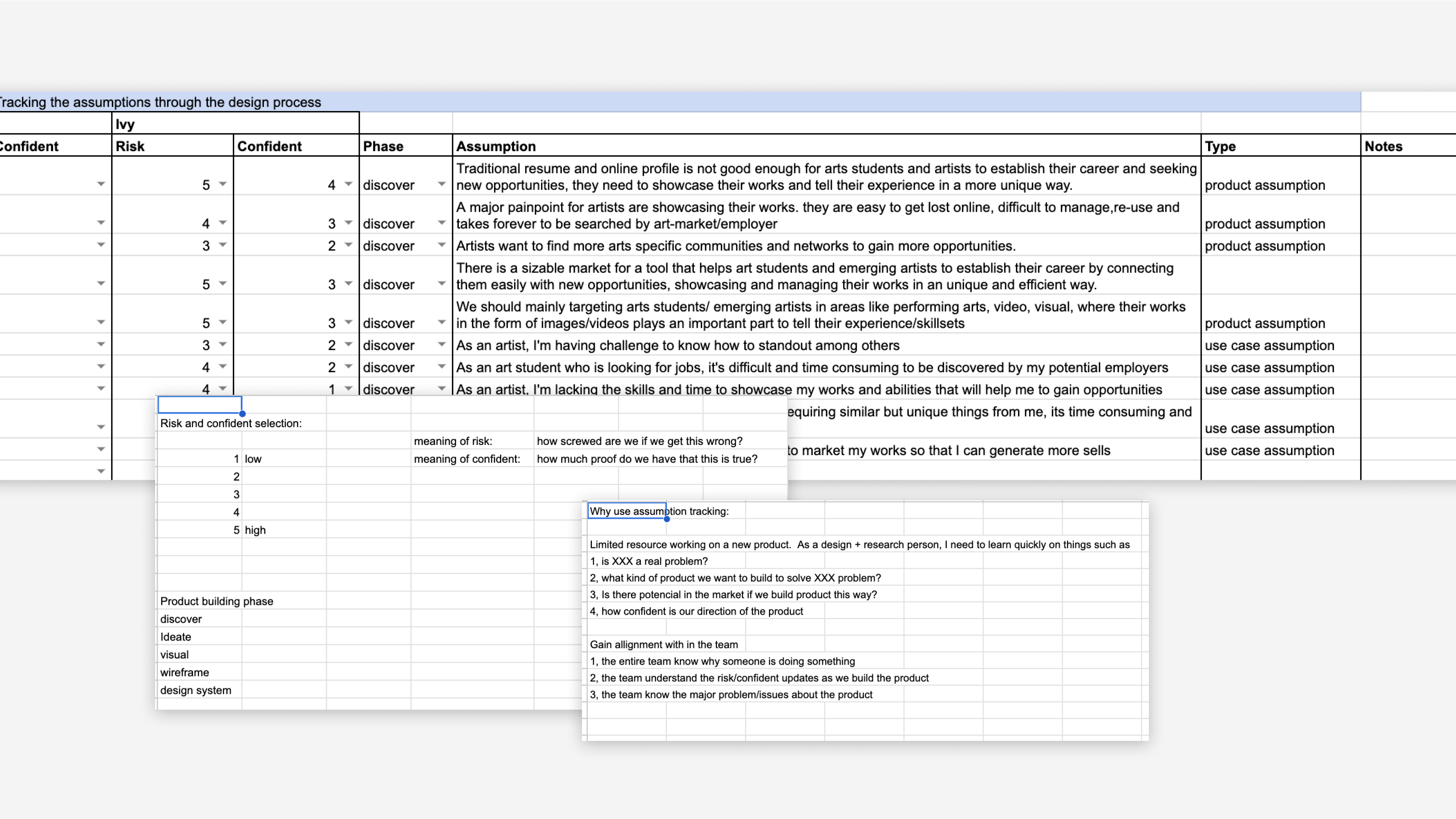

Assumption Tracking

Given the absence of historical user data, we started with educated guesses about user behavior and refined our assumptions through iterative testing. Key assumptions included:

- Users would prefer a guided experience rather than a blank canvas.

- Real-time feedback on prompts would improve usability.

- Users would be comfortable writing simple prompts to describe the dataset they were looking for.

- Users would be confused by technical terms or language.

- Users would want to go back and forth, repeatedly editing and retrying their prompts.

- Users would not be able to find the functions if they were hidden behind dropdowns or collapsed accordions.

We continuously tested and validated these assumptions through user feedback and usability tests.

Rapid Prototyping and Design Iterations

We started prototyping at a very early stage while simultaneously establishing our assumption tracker. Continuous discussions with the team helped define the first set of core functions to be included in the initial scope.

For a quick turnaround and immediate user testing, the first prototype was built on a front-end codebase separate from our existing UI. This allowed for rapid iteration and validation of backend functionality before refining the UX.

The goal of the first round of prototyping was primarily to test functionality and backend performance, with less focus on UX. Once the functionality was finalized, I transitioned to prototyping in Figma, leveraging our existing design system. We iteratively tested multiple design versions in Figma while conducting ongoing ad-hoc user interviews to refine the experience.

Navigating Differing Opinions Through Assumption Tracking

With no existing precedent to rely on, team members often had different perspectives based on their specific domain expertise. To prevent lengthy debates, we used assumption tracking as a structured way to test and resolve conflicting ideas quickly.

For example, when a team member argued that helper text was essential to prevent user confusion, we didn't debate it at length. We recorded it as an assumption, updated the design accordingly, and then validated or refined it based on user feedback.

This collaborative approach ensured that every team member felt heard while allowing us to make data-driven decisions efficiently, reducing friction and accelerating progress.

Consistent Visual Language and Real-Time Feedback

A key lesson from this project was that clear and consistent visual guidance significantly enhances the user experience for a new tool.

Rather than prioritizing aesthetic experimentation, we focused on simple, familiar visual patterns to create an intuitive experience. Consistency in visual language helped users understand how to navigate the platform effortlessly.

Additionally, real-time feedback was critical for user confidence. Since tabular data generation involves varying processing times, we incorporated:

- Loading indicators to set clear expectations.

- Toast messages to inform users of ongoing actions.

- Dynamic button states to reflect the status of the model.

The Next Steps

As the platform continues to evolve, we are:

- Validating or refining assumptions as more users interact with the product.

- Documenting user behavior and pain points to further enhance the experience.

- Expanding the design system to accommodate future iterations and refinements.

Final Thoughts

Designing Gretel Navigator from scratch required a fundamental shift in design thinking, moving from untested assumptions to a highly iterative, user-driven process. Without prior user behaviors to analyze, we defined a novel research approach, continuously tested hypotheses, and rapidly iterated to create an intuitive AI-powered platform for synthetic data generation.

The first round of user testing showed positive results, indicating that our approach was a step in the right direction.

This project highlights the power of cross-functional collaboration, assumption-driven design, and real-time feedback in driving innovation in AI product design.